Introduction

The review was funded by the Nuffield Foundation and the Evidence for Policy and Practice Information and Co-ordinating Centre (EPPI Centre). It was carried out using methods originally developed by the EPPI Centre to review health promotion. To explain a complex process concisely: as much of the available research literature as possible is gathered on the topic under review. Each study is then assessed against criteria concerned with validity and reliability, which reduces the number of studies. The remaining studies are used to draw any relevant conclusions, which can in turn be offered to the various audiences who wish to consider the implications of the findings.

This particular summary of the Assessment Review's key findings is provided for LEA Advisers and others who are in a position to offer support and guidance to schools in their management of summative assessment and tests.

The review methods

The review methods depend to some extent on precise definitions of the variables involved, so that findings can be properly compared with each other. In this case, some of the key variables proved difficult to define precisely. For example, different research studies adopted slightly different definitions of 'motivation', and different mechanisms for measuring it. The Review Group identified the main review question as, 'What is the evidence of the impact of summative assessment and testing on students' motivation for learning?' and operationalised this in terms of inclusion and exclusion criteria. Applying these criteria left 19 studies in the review, and this progressive focusing meant that attention was given to the most relevant studies. Even within this small number there was a wide range of national and cultural contexts, and a diverse set of other variables, including the numbers of students involved in different studies. Strong conclusions are difficult to draw in these circumstances, and those wishing to link research with practice need to examine the individual reports with some care, paying attention to those whose contexts are most relevant to their own. What follows should be read with that caveat in mind.

The importance of context

Assessment policies and practice in the various parts of the United Kingdom have diverged significantly in recent years. It may be unhelpful therefore to offer very specific advice, which may not be relevant to some of these different systems. Readers within the UK and in other parts of the world will need to 'customise' what is offered to the context in which they work.

The definition of 'motivation'

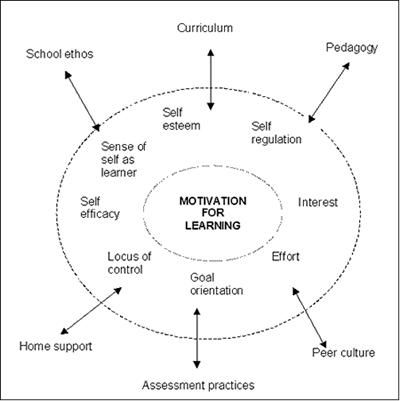

The authors discuss the meaning of motivation as concerned with the drive, incentive or energy to do something: 'Motivation is not a single entity but embraces, for example, effort, self-efficacy, self-regulation, interest, locus of control, self-esteem, goal orientation and learning disposition … motivation for learning is understood to be a form of energy which is experienced by learners and which drives their capacity to learn, adapt and change in response to internal and external stimuli'. The authors provide the diagram below (Figure 3 from the report) to help convey the complexity of motivation for learning, and to indicate that assessment is but one factor influencing it.

Some of the variables relating to motivation and factors affecting it

One of the most productive outcomes of the review could be to give schools and school systems a starting point for reviewing their beliefs and assumptions about motivation, the factors which affect it, and the link between motivation, learning and teaching. Further, we may need to look again at the continuum from extrinsic to intrinsic motivation, moving beyond simplistic distinctions into a more rigorous re-appraisal of how motivation is addressed for different age groups and in different educational settings. Such a debate is a necessary precursor to making the best use of the review as a touchstone for policy advice and decisions.

The impact on teaching and the impact of teaching

One of the most significant parts of the review deals with what happens to teaching when teachers place a high priority on preparing students for summative assessment and tests. Some of the tests have very high-stakes consequences for the students involved: the 11+ test, for example, will determine a student's future education and possibly his or her life chances. In the USA, where progression to the next grade level may be determined by test results, doors may be opened or closed to students because of their test performance. Other tests appear to have higher-stakes consequences for schools and teachers than for the students themselves. In England, for example, OFSTED inspections may be triggered by test data analysis. Even when the consequences of these tests for the students are not significant, it is interesting to note that many students think that they are.

Where tests are considered to have high-stakes consequences for students, schools, or both, there is evidence that teachers narrow the range of teaching skills they use and focus on those strategies that most closely resemble the 'style' of the test questions. In some cases, teachers focus almost entirely on coaching students in how to answer test questions, whether or not the students really understand the material. In the long term, the students are then disadvantaged by appearing to be more proficient in their learning than they actually are, which can confuse or distract future teachers as well as the students and their parents.

The review concludes this very interesting section by suggesting teaching strategies that can reduce the potentially negative impact of summative testing on some students and some teachers. Among the suggestions that highlight the impact of teaching are the following:

- Avoid using fear of poor performance as a negative incentive;

- Avoid explicitly coaching students on how to pass the test, and avoid using class time for repeated practice tests;

- De-mystify the tests themselves by explaining their purpose, techniques and implications;

- Provide feedback to students that is specific, related to clear criteria and non-judgemental, and is focused on the task, not the person.

Included in the list of suggestions of actions that would increase the positive impact of summative assessment are the following:

- Develop students' self-assessment skills and their use of learning criteria, rather than performance criteria, as part of a classroom environment that promotes self-regulated learning;

- Ensure that the demands of the test are consistent with the expectations of teachers and the capabilities of the students;

- Use assessment to convey a sense of learning progress to the students;

- Support low-achieving students' self-efficacy (i.e. belief in themselves as learners) by making learning goals explicit and showing them how to direct their efforts.

There is one other suggestion directed at national rather than local policy-makers:

- Reduce the 'stakes' attached to test results by broadening the base of information used in evaluating the effectiveness of schools, and similarly broadening the range of information used in assessing the attainment of students.

The impact of summative assessment on students

Most of the evidence presented in the review indicates a negative impact of summative assessment on the learning of some students. A range of reasons are cited, including:

- Lowering the self-esteem of less successful students, which reduces their effort;

- Test anxiety, which affects students differentially;

- Restricting learning opportunities by teaching which is focused on what is tested, and by teaching methods which favour particular approaches to learning.

One major study, however, argues that appropriate use of summative tests can improve the motivation of low-achieving students, especially where excellent feedback is used to focus the student on improvement.

In other words, there is ammunition within the review for a wide spectrum of existing views about the impact of testing on students. It remains an issue that individual schools will need to review and clarify for themselves. Above all, schools will need guidance and support in establishing assessment strategies that actually improve learning rather than merely measure it.

Can we test less and have students achieve more?

One of the questions worth sharing with schools concerns their use of 'optional' national tests, and other tests that are designed to gather data about students' learning. There is a risk that requiring students to undergo more testing than the basic national requirements could have a damaging long-term effect on the self-esteem, self-efficacy and effort of some students, and thereby on their future test performance. The exact proportion vulnerable to such damage is hard to ascertain. The only justification for subjecting students to more tests, with the concomitant loss of teaching time, would be that the additional data from the tests somehow compensated by improving the quality of teachers' planning and teaching, and thus improved future test performance. This is a very tricky equation, and schools should be advised to think long and hard before they take a step that could do more harm than good.