EPPI Reviewer allows to interrogate Large Language Models directly from within the user interface.

There are many possibilities for using LLMs in systematic reviews, and we need to evaluate them carefully before using them widely. For this reason, the use of this feature should be for evaluation purposes only. We do not currently recommend its use in real reviews, not without first testing the accuracy of results in a formal manner.

The feature is only available in reviews where it has been enabled by purchasing Credit and assigning said credit for use on LLMs in a given review. Credit can be purchased from the Account Manager, and then assigned to reviews (by clicking "Edit" for a given review, in the "Summary\Reviews" tab).

EPPI Reviewer implements a number of different LLMs, which is essential to conduct studies about how well they perform. For this reason, we expect the list of available LLMs to change during time (LLMs that cannot be relied upon may disappear, if any, more/newer LLMs will also appear).

To use the LLM tools within a particular review, you will need to add credit to your user account (or any other user account that has access to the review concerned). You can then use this credit to cover charges for using the LLM service. See here for further details.)

Once LLMs are enabled in a given review, please follow the following steps to use them:

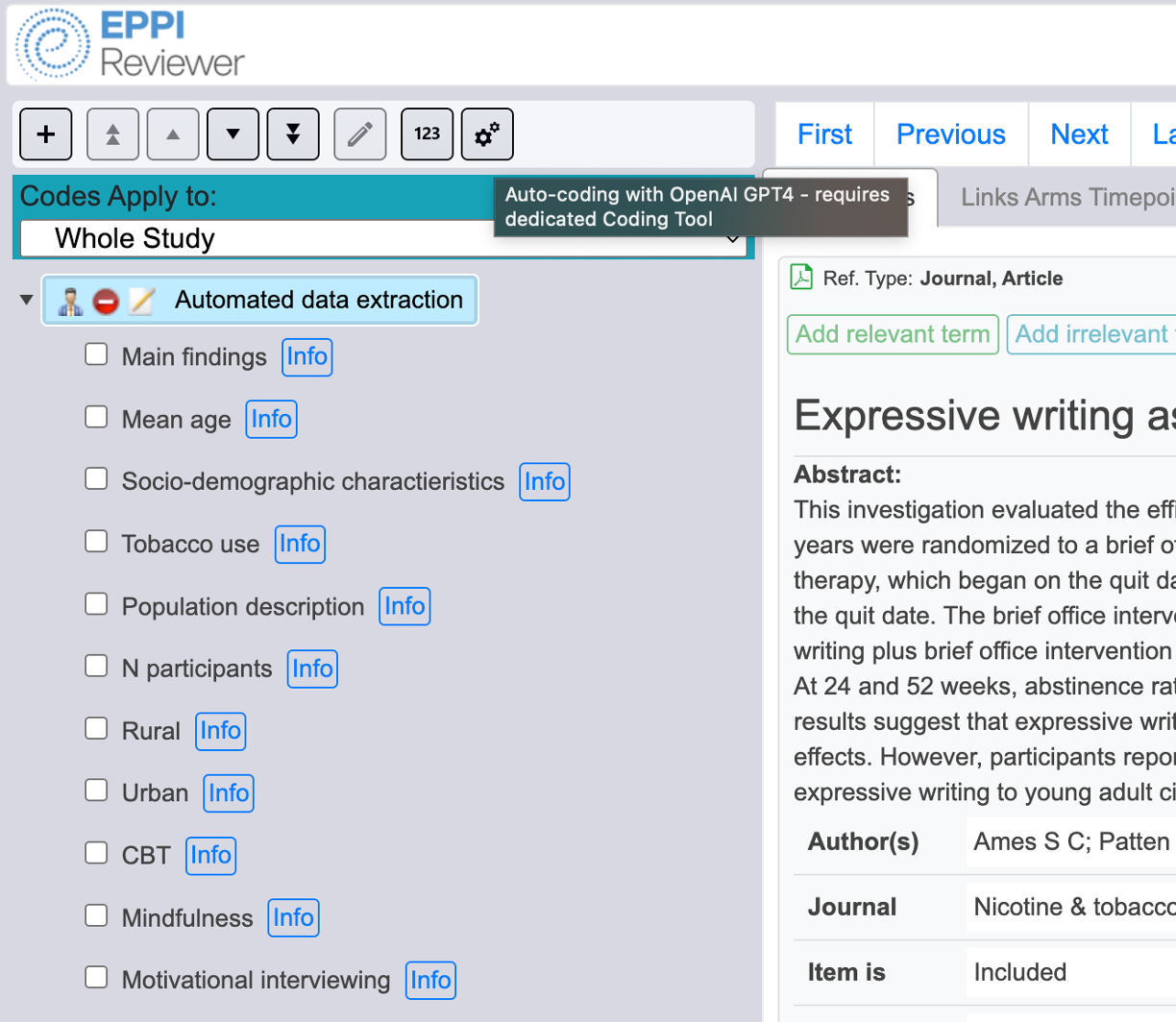

1. Create a code set

Create a code set using the standard tools and add the codes you want to extract information about (e.g. population and intervention characteristics). The text in the 'description' box is the prompt for the language model, and must follow a pre-specified format:

label: data type // prompt

The label should be a short, informative word or two which describes the data to be extracted or identified. The data type can be one of three types: number, boolean or string. If you specify a number, then the model will attempt to extract numeric information into the 'info' box. If you select the boolean datatype, then the model will look to see whether a given characteristic is present and the box will be 'ticked' or not ticked, depending on whether or not it is found. The text after the // is the prompt. This should request the model to find the specified information.

For example:

population_description: string // extract a detailed summary of the population characteristics

This will cause the model to summarise the population characteristics into the 'info' box.

population_number: number // the total number of participants in the study

This will cause the model to insert a number into the info box - the number of people in the study.

physical_activity: boolean // did the intervention contain a physical activity component?

This will cause the model to look for physical activity in the text describing the intervention. If it is found, then the box will be ticked. (And not ticked, if it wasn't found.)

2. Ensure that the correct text is present in the abstract field

Currently, the model works using the text in the abstract field. This can be quite long: up to 3,500 words. You can either simply use abstracts, or copy and paste relevant sections of papers into the abstract field. Be sure that it has saved before running the model.

3. Run the model

Once you have a data extraction / keywording tool prepared using the structure above, and you have the right text in the abstract field, it's time to run the model. Ensure that you have selected the root of your codeset on the left of the screen, and the 'auto-coding' button should appear. Click the button. You will be offered a list of LLMs and a few options. Make your choices, click "OK", and wait a few seconds. The results should appear in your codeset.

4. Evaluate results

As mentioned above, this feature is currently for evaluation only, so please do evaluate your results.

5. Run LLM-coding in batches

In the "References" tab of the main page, it is possible to submit batches of items for LLM-coding. Batches will be executed on a schedule, whith a configurable limit on how many concurrent jobs can run at any time. Current limit is set to 3. Batches can contain a maximum of 1000 items each.

To submit batches:

- Expand the "Codes" column and select the root of the coding tool containing your prompts. The "Robots" button will appear in the header of the Codes column.

- From the items list, select the items you wish to LLM-code, click on the Robots button.

- You will be offered to pick a specific LLM and to set a few options. Make your choices and click "submit".

- Your batch will be entered in the queue and you will see the list of queued jobs.

- Only scheduled and currently running batches appear in this list

- You can see the details of your own jobs and the size of batches submitted by other people

- To find out about older batches, click on "past jobs" and open the "Robot-coding" tab. You will get to see the list of jobs submitted for the current review.

- You can expand each job record and consult the error list, which, if present, will specify what item was affected.

- The past jobs record can also be used to find out how much was charged to run each job, how long it took to finish and more.

Full-text coding

When using batches, it is possible to tick the "Code using full text documents" option to submit text extracted from PDFs, rather than just title and abstract. This will trigger a "preliminary" job which sends the PDFs uploaded to the selected items to a custom-build Machine Learning module to parse the PDFs and extract their text in Markdown format. This job can be slow, processing one or few PDFs per minute; while it's running, the job progress will remain fixed on "Done Item 0 of N". EPPI Reviewer stores the Markdown extracted from PDFs indefinitely, so each PDF needs to be parsed only once and won't be parsed again if re-submitted.

Full-text batch jobs will submit much larger amounts of text to the LLM, resulting in significantly higher costs. Since LLMs are also relatively slow, full-text jobs are also slower, even after parsing all the PDF text in the "preliminary" phase described above.

How it works:

Behind the scenes, EPPI Reviewer collects the prompts present in the selected coding tool and uses them to produce a single request to the required LLM API (as provided via the Microsoft Azure platform), including the prompts, instructions, and the Title and Abstract of the Item in question. Upon receiving the response, it then applies the relevant coding, including the "additional text" returned by the API, as if a person was ticking the code checkbox and typing the additional text in the "info box".

As of version 6.15.3.0, the initial implementation has been extended in the following ways:

- Coding added by an LLM is added in the name of the robot itself (e.g. "OpenAI GPT4"). This ensures it is always possible to discriminate between coding produced by the robot and coding produced by humans.

- Adding the coding in the robot's name also allows you to set the relevant coding tool in "comparison mode", making the machine add its coding as "incomplete", and thus facilitating the comparison against "gold standard" coding (produced by actual people).

- By default, if the robot is asked to code an item when coding is already present (and completed) in the name of someone else, the robot will produce the coding as "incomplete", which hides it "behind" the complete version. This is the default behaviour as it guarantees that it will be always possible to discriminate between coding produced by the robot and coding produced by humans.

- The option to change this behaviour is automatically presented to users when appropriate. Un-ticking the "Always add coding in the Robot's name" option will make the resulting codes appear as part of the already present (and completed) coding, thus "filling in" the gaps (if any) but without overwriting any coding already present.

- For the same reason, by default, the robot adds its coding as "locked". This behaviour is useful to prevent accidental editing of the coding, which would blur the lines between "robot" and "human" coding.

- There is an option to change this behaviour too.

- The underlying GPT4 version was upgraded to use the latest version available (GPT4o).

Evaluation aids

In the home tab, "Coding progress" panel, review administrators have access to "all coding" reports, which will include completed and incomplete coding for the selected coding tool. Within the "progress" details of any given coding tool, the "More..." button provides access to Excel coding reports which are suitable for not-so-small evaluations of LLM-coding results. If you'll batch code against a coding tool in "Comparison data-entry" mode, the LLM will add its coding as incomplete, which allows to run "multiple coding" exercises using a mix of different LLMs and humans. The "all coding" excel report then allows to compare decisions and evaluate LLM accuracy. There is also the option to obtain the same data in a JSON report, which can be used for automated analyses.

The same area also contains a "Delete all coding, by this user, for this coding tool" function. You can use this function to quickly remove all coding added by an LLM, refine the prompts and re-submit batches. This is useful especially at the very beginning of the process, when prompts are likely to be very far from optimal and there is little/no need to record all the prompt-tweaking iteration details.

Version History

Most LLM models are explicitly designed to be continuously updated, however, some LLM APIs allow the client (EPPI Reviewer) to ask for a specific Model and version combination. EPPI Reviewer does in fact specify both model and version explicitly, as it is of paramount importance to keep track of them for the purpose of evaluations. Please refer to the version history below when compiling evaluation reports.

- 12 December 2023. Main Model: GPT4 8K. Version: 2023-07-01-preview.

- 18 June 2024. Main Model GPT4o. Version: 2024-02-01.

- 6 December 2024. The "Coding against the PDF full text" feature becomes available.

- 12 February 2025. The "credit" system allowing end users to pay for their own use of LLMs becomes available (until now, the EPPI Centre was shouldering all costs). Please see here for more details.

- 27 May 2025. Support for multiple LLMs is added, several OpenAI models are made available:

- OpenAI GPT4: This is the first LLM deployed in EPPI Reviewer, in 2024. The model used is OpenAI "GPT-4o" with "model version = 2024-08-06". GPT models are optimised for "Chat", making them quicker and more "creative" than reasoning models. Cost per million input tokens: £2. Cost per million output tokens: £8.

Gpt-4.1-nano: The model used is OpenAI "GPT-4.1-nano" with "model version = 2025-04-14". This is a quick and cheap model. Cost per million input tokens: £1. Cost per million output tokens: £1.

GPT-4.1: Latest model of the GPT series. The model used is OpenAI "GPT-4.1" with "model version = 2025-04-14". Cost per million input tokens: £2. Cost per million output tokens: £6.

o4-mini: The model used is OpenAI "o4-mini" with "model version = 2025-04-16". This is a reasoning model, optimised for speed/efficiency. Reasoning models iterate a little with the intention of giving more accurate answers (but evaluation is needed!). This makes them slower than other models though. Cost per million input tokens: £1. Cost per million output tokens: £3.

o3: The model used is OpenAI "o3" with "model version = 2025-04-16". This is the latest OpenAI reasoning model we can currently make available, so it's also slow and expensive. Cost per million input tokens: £8. Cost per million output tokens: £30.

- 3 July 2025. Added support to DeepSeek-R1. This is a slow reasoning model, which outputs an "answer", preceded by the "reasoning" text. EPPI Reviewer does not retain the Reasoning part of the response. Cost per million input tokens: £1. Cost per million output tokens: £4.

- 30 September 2025. Added support to:

- Llama 3.1. The model used is "Llama-3.1-405B-Instruct". This is a multilingual model optimised for assistant-like chat/tasks. Cost per million input tokens: £4. Cost per million output tokens: £12.

- Mistral Large 24.11. The model used is "Mistral Large 24.11". This is a multilingual reasoning model, (mostly) open source. Cost per million input tokens: £2. Cost per million output tokens: £5.